The ability of humans to process visual information in as little as 150 miliseconds is just outstanding. Humans naturally are able to distinguish

between different objects or different landscapes in their field of vision (e.g. beach and ocean) and also recognise, what objects they see. Imitating this ability

is a large research area in artificial intelligence and computer vision. Recent advancements in computing power and algorithm design have produced astonishing results. A neural network

by Szegedy et al. called Inception v4 reached a top 1 error rate of 20.0% and a top 5 error rate of 5.0%, in a dataset (ILSVRC-2012) with over 1000 different classes (dog, flower, car etc.).

This means it classifies objects in images 4 out of 5 times to the exact correct class, and looking at the 5 most probable classes the neural network outputs, it has a 95%

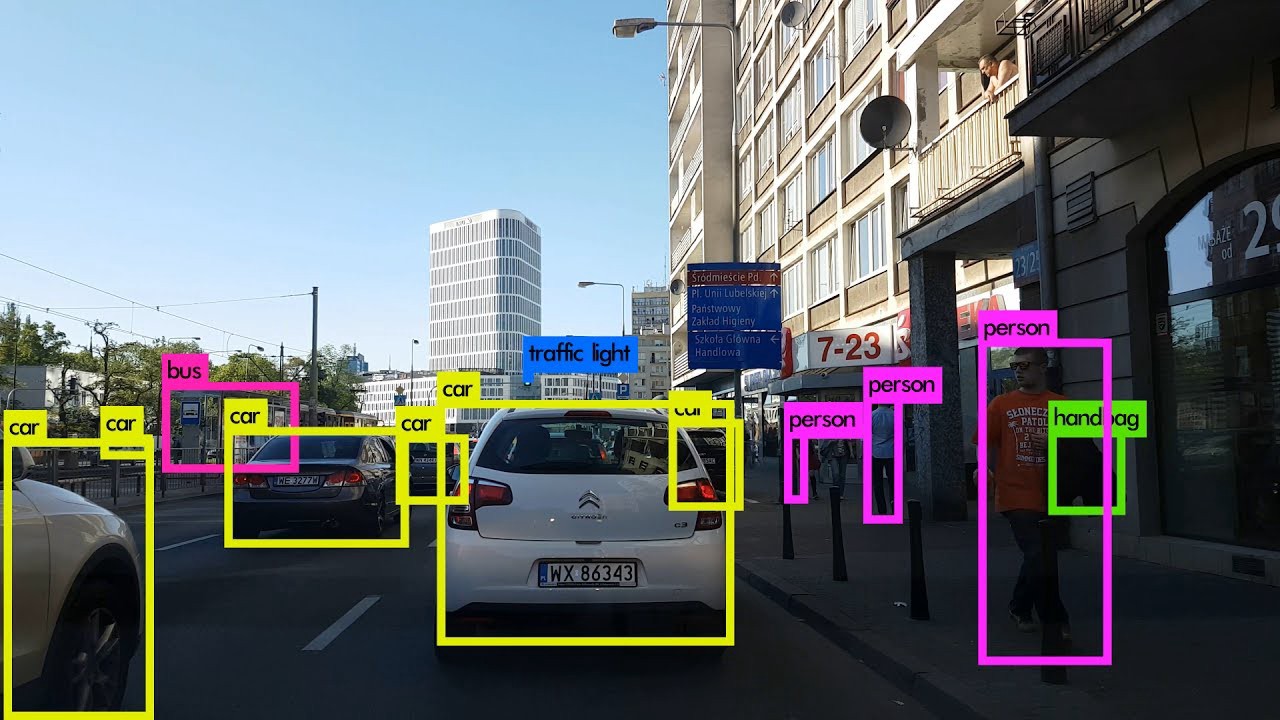

accuracy containg the exact correct class in those 5 classes. Also the neural network called YOLOv3, which can even be run in real-time, shows impressing results in localizing and classifying objects:
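To make the top-1 and top-5 error rates mentioned above concrete, here is a minimal sketch of how a top-k error could be computed. The class scores, the labels and the function names `top_k_correct` and `top_k_error` are made up for illustration; they are not taken from the Inception v4 evaluation. With only four classes in the toy data, the sketch uses k=2 instead of k=5.

```python
def top_k_correct(scores, true_label, k):
    """Return True if true_label is among the k highest-scoring classes."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    return true_label in ranked[:k]


def top_k_error(predictions, labels, k):
    """Fraction of images whose true label is NOT among the top-k classes."""
    misses = sum(
        not top_k_correct(scores, label, k)
        for scores, label in zip(predictions, labels)
    )
    return misses / len(labels)


# Hypothetical class scores for three images (made-up numbers).
predictions = [
    {"dog": 0.70, "cat": 0.20, "car": 0.06, "flower": 0.04},
    {"dog": 0.10, "cat": 0.30, "car": 0.50, "flower": 0.10},
    {"dog": 0.45, "cat": 0.40, "car": 0.10, "flower": 0.05},
]
labels = ["dog", "cat", "cat"]

top1 = top_k_error(predictions, labels, k=1)
top2 = top_k_error(predictions, labels, k=2)
print(f"top-1 error: {top1:.2f}, top-2 error: {top2:.2f}")
# prints "top-1 error: 0.67, top-2 error: 0.00"
```

In this toy data the highest-ranked class is wrong for two of the three images, but the correct class is always among the two most probable ones, which is why the top-2 error drops to zero.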

At this point you might be wondering: what information do computers extract in image recognition, and how do they actually extract it from pixels?

Image recognition by computers can be divided into several subcategories, and the definitions vary a bit. We're going to look at two main classes of image recognition: image classification and image segmentation.

Image classification is the task where the output is a class for the whole image. For example, it will output "dog" if there's a dog in the image, or "cat", "bird" and so on (not restricted to animals). It is also possible to output multiple classes if several recognizable objects are in the image.

Image segmentation goes further: instead of looking only at the entirety of the pixels in an image, as image classification does, it assigns each individual pixel to a class. This is a powerful concept for distinguishing between different segments in an image and for localizing objects. Partitioning the image into segments can be done with several methods, such as thresholding, edge detection or deep learning.
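The difference between the two outputs can be sketched with thresholding, the simplest of the segmentation methods named above. The 4x4 image, the threshold of 128 and the function names `classify` and `segment` are assumptions made for this example. The "bright"/"dark" rule in `classify` is a toy stand-in to show the shape of the output, not how a neural network classifies an image.

```python
# Hypothetical 4x4 grayscale image with intensities in 0..255.
image = [
    [12, 20, 200, 210],
    [15, 25, 220, 230],
    [10, 180, 190, 30],
    [5, 18, 22, 28],
]


def classify(image, threshold=128):
    """Image classification: a single label for the whole image (toy rule)."""
    pixels = [p for row in image for p in row]
    mean = sum(pixels) / len(pixels)
    return "bright" if mean > threshold else "dark"


def segment(image, threshold=128):
    """Segmentation by thresholding: one class (0 or 1) per pixel."""
    return [[1 if p > threshold else 0 for p in row] for row in image]


label = classify(image)  # one value for the whole image
mask = segment(image)    # same height and width as the input

print(label)
for row in mask:
    print(row)
```

The classifier returns one label, while the segmentation returns a mask with the same dimensions as the input, in which every pixel carries its own class.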

But how does all of this actually work? In the following, we're going to focus on deep learning methods for image classification and segmentation.

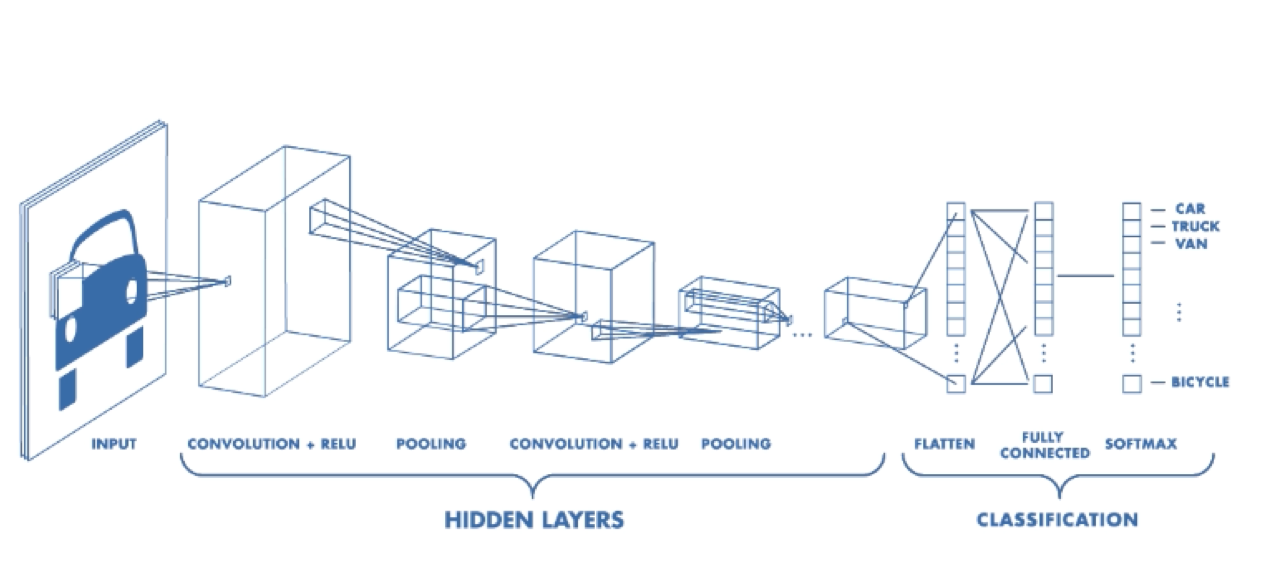

A common class of neural networks used for image recognition tasks is the Convolutional Neural Network (CNN). The goal of a CNN is to extract high-level features (shapes, lines etc.) from low-level features (pixels) by applying a series of mathematical operations. The idea is to extract features that characterize and resemble objects as closely as possible.

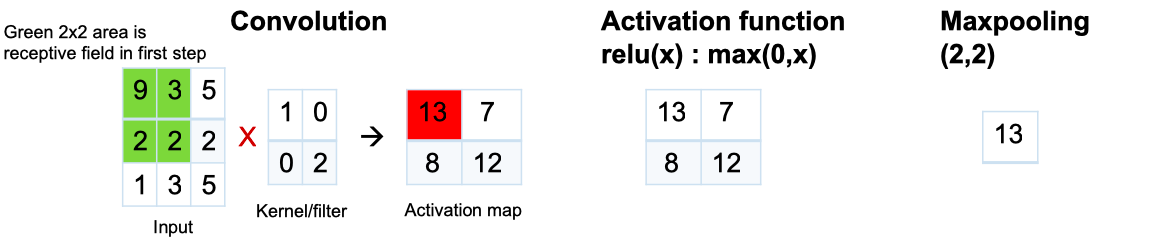

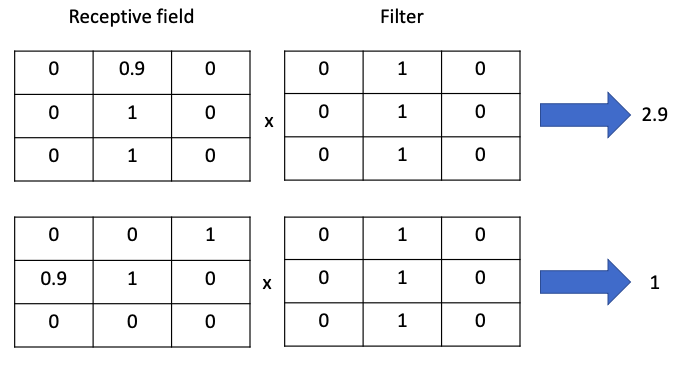

A usual way of going from low-level features to high-level features is to pass the image to a convolutional layer, which uses a filter/kernel (e.g. with a size of 5x5, 3x3 or 2x2 pixels) that convolves, i.e. slides, over the whole image. The input area covered by the filter is called the receptive field; it is multiplied element-wise with the filter and then summed up. When this is done for all receptive fields, it leaves a new array, called the feature map or activation map. Afterwards, a max pooling layer is applied, which also slides over the feature map and returns the highest value in each window. Max pooling is used to reduce the size of the feature map, which also helps against overfitting, while retaining as much information as possible.

A simplified example with an input of 3x3 pixels is shown below:

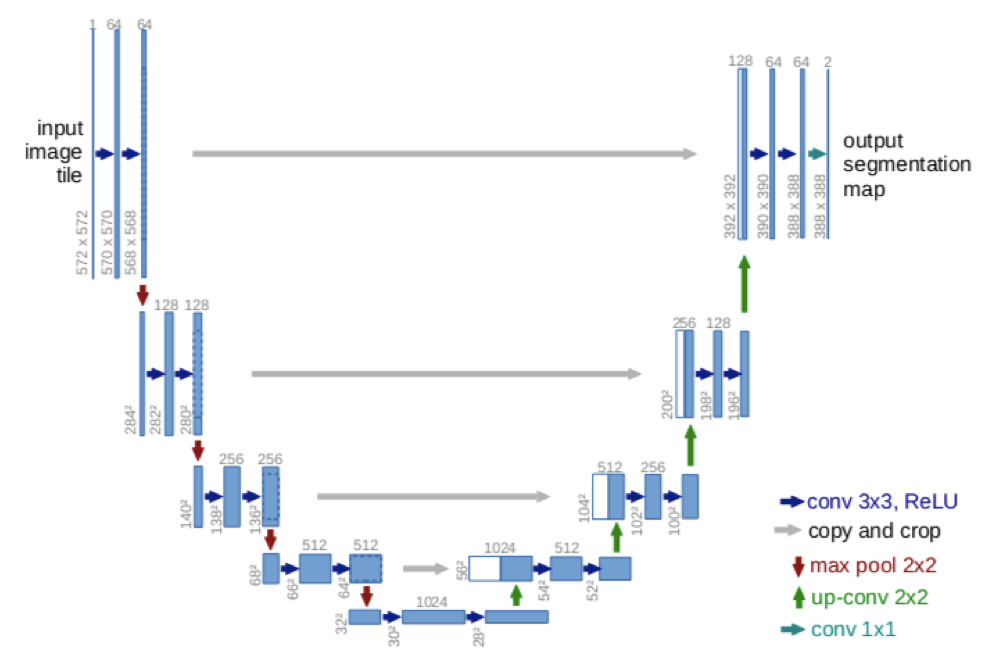
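The convolution and max pooling steps described above can also be sketched in plain Python. This is a minimal illustration under simplifying assumptions: a single channel, stride 1, no padding and non-overlapping 2x2 pooling windows. The function names `convolve2d` and `max_pool2d`, the 5x5 input and the kernel values are made up for this example and are not taken from the figure. In a real CNN the kernel values are learned during training.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (stride 1, no padding).

    Each receptive field is multiplied element-wise with the kernel and
    summed up. As in most deep learning libraries, the kernel is not flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


def max_pool2d(feature_map, size=2):
    """Keep the highest value of each non-overlapping size x size window."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            window = [
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


# Hypothetical 5x5 input and a 2x2 kernel that compares diagonal neighbours.
image = [
    [1, 0, 2, 1, 0],
    [0, 1, 3, 0, 1],
    [2, 1, 0, 1, 2],
    [1, 0, 1, 3, 0],
    [0, 2, 1, 0, 1],
]
kernel = [
    [1, 0],
    [0, -1],
]

feature_map = convolve2d(image, kernel)
# [[0, -3, 2, 0], [-1, 1, 2, -2], [2, 0, -3, 1], [-1, -1, 1, 2]]
pooled = max_pool2d(feature_map, size=2)
# [[1, 2], [2, 2]]
```

The 5x5 input shrinks to a 4x4 feature map after the convolution and to a 2x2 map after pooling, which is the size reduction the text refers to.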

For image segmentation, the feature extraction part is the same as in image classification. However, as the desired output is not a class but an image with the segment regions, it is necessary to upsample the high-level feature maps (which have become smaller than the original image through max pooling). Upsampling increases the resolution in each step and, after several steps, gives back an output image that has the same dimensions as the input image but contains the desired segmentation. This can be done with transposed convolutions, which can loosely be thought of as the reverse operation of a convolution. One popular network used for image segmentation is U-Net [3], which is shown in the accompanying figure.
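How a transposed convolution increases resolution can be sketched as follows. This is a simplified single-channel version without padding; the function name `transposed_conv2d`, the 2x2 input and the all-ones kernel are assumptions chosen so the result is easy to follow. In a network such as U-Net the kernel values would be learned.

```python
def transposed_conv2d(feature_map, kernel, stride=2):
    """Upsample by letting every input value "stamp" a scaled copy of the
    kernel onto the output; overlapping contributions are added up."""
    kh, kw = len(kernel), len(kernel[0])
    in_h, in_w = len(feature_map), len(feature_map[0])
    out_h = (in_h - 1) * stride + kh
    out_w = (in_w - 1) * stride + kw
    output = [[0] * out_w for _ in range(out_h)]
    for i in range(in_h):
        for j in range(in_w):
            for di in range(kh):
                for dj in range(kw):
                    output[i * stride + di][j * stride + dj] += (
                        feature_map[i][j] * kernel[di][dj]
                    )
    return output


# Hypothetical 2x2 feature map, upsampled to 4x4.
small = [
    [1, 2],
    [3, 4],
]
kernel = [
    [1, 1],
    [1, 1],
]

upsampled = transposed_conv2d(small, kernel, stride=2)
# [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
```

With a stride of 2 and a 2x2 kernel, each step doubles the height and width, so a few such steps can bring a pooled feature map back to the dimensions of the input image.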

So as you can see after this introduction, image recognition and CNNs rely on a lot of math!

Sources:

[1]: https://towardsdatascience.com/yolo-v3-object-detection-53fb7d3bfe6b

[2]: https://www.mathworks.com/videos/introduction-to-deep-learning-what-are-convolutional-neural-networks--1489512765771.html

[3]: Ronneberger, Olaf; Fischer, Philipp; Brox, Thomas (2015). "U-Net: Convolutional Networks for Biomedical Image Segmentation". arXiv:1505.04597